
For reasons I never understood, Amazon S3 does not expose the total size and number of objects in a bucket as simple bucket metadata. This means that to answer the simple question “How do I get the total size of an S3 bucket?”, you have to scan the bucket, counting the objects and adding up their sizes. This is slow, especially when a bucket holds millions of objects.
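Here is a minimal Python sketch of that scan. It assumes each page has the shape of an S3 ListObjectsV2 response (up to 1,000 objects in "Contents", with "IsTruncated" and "NextContinuationToken" signalling further pages). The fetch_page callable is hypothetical and stands in for one list request, so the counting logic can be shown without AWS credentials.

```python
from typing import Callable, Optional

# One page of a listing, shaped like an S3 ListObjectsV2 response:
# "Contents" (up to 1,000 objects), "IsTruncated", "NextContinuationToken".
Page = dict
FetchPage = Callable[[Optional[str]], Page]


def scan_bucket(fetch_page: FetchPage) -> tuple[int, int]:
    """Walk every page of a bucket listing; return (total_bytes, object_count)."""
    total_bytes = 0
    count = 0
    token: Optional[str] = None  # no token means "start from the first key"
    while True:
        page = fetch_page(token)  # hypothetical: one ListObjectsV2 request
        for obj in page.get("Contents", []):
            count += 1
            total_bytes += obj["Size"]
        if not page.get("IsTruncated"):
            return total_bytes, count
        token = page["NextContinuationToken"]
```

Each page costs one round trip, so a bucket with 5 million objects needs about 5,000 sequential requests, which is where the slowness comes from.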

In the AWS console, finding the size of an S3 bucket is not intuitive, and the information is hidden in the menus. Learn how to find the total size, display it graphically in CloudWatch, or retrieve it programmatically from the command line.

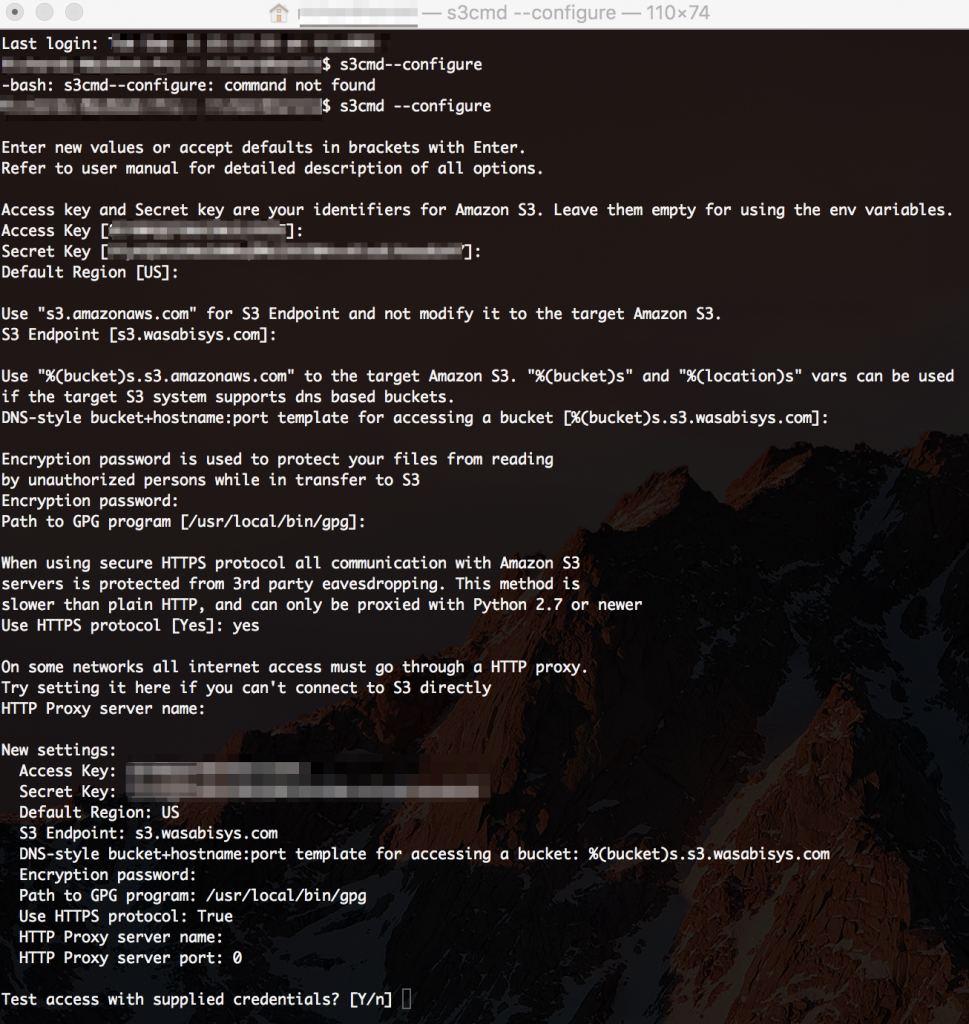

How to Get the Size of an Amazon S3 Bucket using the CLI

The AWS CLI supports the --query parameter, which takes a JMESPath expression.

This means that you can add up the Size values of the objects in the listing with sum(Contents[].Size) and count the objects with length(Contents[]).

This can be done with the official AWS CLI as follows (the feature was introduced in February 2014):

```shell
aws s3api list-objects --bucket BUCKETNAME --output json --query "[sum(Contents[].Size), length(Contents[])]"
```
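If you want the same two numbers inside a script, here is a minimal Python sketch that runs list-objects without --query and computes the equivalent of [sum(Contents[].Size), length(Contents[])] itself. The helper names are hypothetical, and it assumes the aws CLI is installed and configured; by default the CLI fetches every page and merges the Contents lists into one JSON document.

```python
import json
import subprocess


def summarize_listing(listing: dict) -> tuple[int, int]:
    """Mirror the JMESPath expression [sum(Contents[].Size), length(Contents[])]."""
    contents = listing.get("Contents") or []  # an empty bucket has no Contents
    return sum(obj["Size"] for obj in contents), len(contents)


def bucket_totals(bucket: str) -> tuple[int, int]:
    """Return (total_bytes, object_count) for a bucket via the aws CLI."""
    result = subprocess.run(
        ["aws", "s3api", "list-objects", "--bucket", bucket, "--output", "json"],
        check=True, capture_output=True, text=True,
    )
    out = result.stdout
    return summarize_listing(json.loads(out) if out.strip() else {})
```

Doing the sum in Python also copes with an empty bucket, where the Contents key is missing and there is nothing to add up.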

Alternatively, this can now be done trivially with the official AWS command line client alone:

```shell
aws s3 ls --summarize --human-readable --recursive s3://bucket-name/
```

It also accepts a path prefix if you don’t want to count the whole bucket:

```shell
aws s3 ls --summarize --human-readable --recursive s3://bucket-name/directory
```

Note that this is not a shortcut: the command still lists every object under the bucket or prefix and adds up their sizes, so it can take a long time on buckets with millions of objects. The --human-readable flag displays sizes in units such as KiB, MiB and GiB, so you don’t need to take your calculator out.
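For scripting, here is a minimal Python sketch that runs the same listing without --human-readable (so the size is a plain byte count) and reads the two summary lines printed at the end, "Total Objects:" and "Total Size:". The helper names are hypothetical, the aws CLI is assumed to be installed and configured, and the parsing relies on the command's usual output format rather than a documented contract.

```python
import re
import subprocess

# Summary lines printed by `aws s3 ls --summarize`; anchored at line start so
# object keys in the listing lines above cannot match by accident.
_OBJECTS = re.compile(r"^\s*Total Objects:\s*(\d+)\s*$", re.MULTILINE)
_SIZE = re.compile(r"^\s*Total Size:\s*(\d+)\s*$", re.MULTILINE)


def parse_summary(output: str) -> tuple[int, int]:
    """Return (total_bytes, total_objects) from non-human-readable summary output."""
    objects = _OBJECTS.search(output)
    size = _SIZE.search(output)
    if objects is None or size is None:
        raise ValueError("no summary lines found; was --summarize passed?")
    return int(size.group(1)), int(objects.group(1))


def prefix_totals(s3_uri: str) -> tuple[int, int]:
    """List a bucket or prefix, e.g. "s3://bucket-name/directory", and summarize it."""
    result = subprocess.run(
        ["aws", "s3", "ls", "--summarize", "--recursive", s3_uri],
        check=True, capture_output=True, text=True,
    )
    return parse_summary(result.stdout)
```

A human-readable size such as "3.0 KiB" deliberately fails to parse here, which keeps the result an exact byte count.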

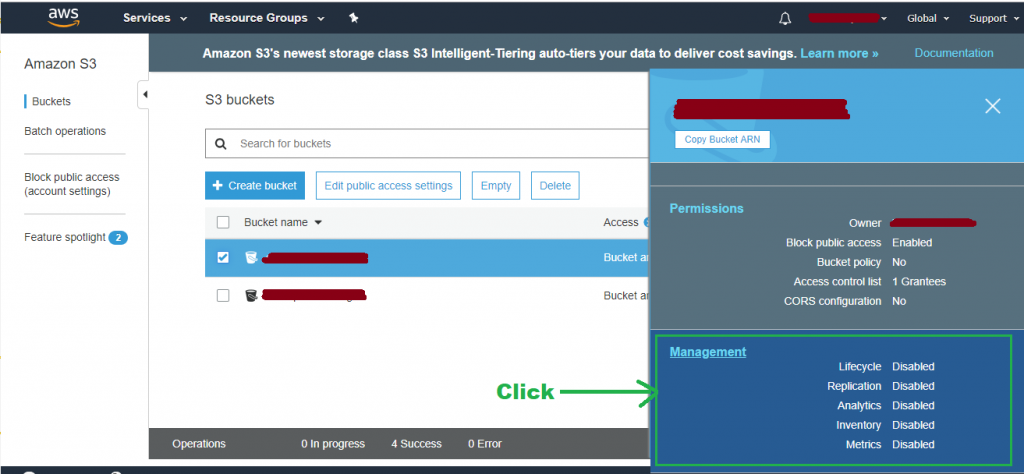

Using the AWS Web Console and CloudWatch

- Go to CloudWatch

- Click on Metrics on the left side of the screen

- Click on S3

- Click on “Storage Metrics”

- You’ll see a list of all the buckets. Note that there are two possible points of confusion here:

- a. You will only see buckets containing at least one object.

- b. You may not see buckets that were created in another region; you may have to switch regions using the drop-down menu in the top right-hand corner to see them.

- Type “StandardStorage” into the “Search for a metric, dimension or resource identifier” box.

- Select the buckets whose total size you want to see (or select all of them with the check box on the left, under the word “All”).

- Select a time range of at least 3d (3 days) in the timeline at the top right of the screen.

You will now see a graph showing the daily size (in bytes or another unit) of each selected bucket over the selected period.
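The daily BucketSizeBytes metric behind this graph can also be fetched from the command line with aws cloudwatch get-metric-statistics. Below is a minimal Python sketch, assuming the objects are in the Standard storage class (StorageType=StandardStorage, as in the search above) and that you query the region the bucket lives in. The helper names, bucket name and region are placeholders, and the aws CLI is assumed to be installed and configured.

```python
import json
import subprocess
from datetime import datetime, timedelta, timezone
from typing import Optional


def metric_command(bucket: str, region: str, days: int = 3) -> list[str]:
    """Build an `aws cloudwatch get-metric-statistics` call for BucketSizeBytes."""
    end = datetime.now(timezone.utc).replace(microsecond=0)
    start = end - timedelta(days=days)
    return [
        "aws", "cloudwatch", "get-metric-statistics",
        "--region", region,  # S3 storage metrics live in the bucket's own region
        "--namespace", "AWS/S3",
        "--metric-name", "BucketSizeBytes",
        "--dimensions",
        f"Name=BucketName,Value={bucket}",
        "Name=StorageType,Value=StandardStorage",  # same filter as the console search
        "--start-time", start.isoformat(),
        "--end-time", end.isoformat(),
        "--period", "86400",  # one datapoint per day
        "--statistics", "Average",
        "--output", "json",
    ]


def latest_size(response: dict) -> Optional[float]:
    """Return the newest daily value in bytes, or None if there are no datapoints."""
    points = response.get("Datapoints", [])
    if not points:
        return None
    # Assumes timestamps are ISO 8601 strings in one format, so they sort chronologically.
    newest = max(points, key=lambda p: p["Timestamp"])
    return newest["Average"]


def bucket_size_bytes(bucket: str, region: str, days: int = 3) -> Optional[float]:
    """Run the CLI and return the most recent daily bucket size in bytes."""
    result = subprocess.run(
        metric_command(bucket, region, days),
        check=True, capture_output=True, text=True,
    )
    return latest_size(json.loads(result.stdout))
```

Because S3 reports storage metrics only once a day, the three-day default window mirrors the console advice to select at least 3d. For the object count, the same call works with --metric-name NumberOfObjects and the dimension StorageType=AllStorageTypes.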
